Papers

Standard methods for measuring ideology from voting records assume that individuals at the ideological ends should never vote together in opposition to moderates. In practice, however, there are many times when individuals from both extremes vote identically but for opposing reasons. Both liberal and conservative justices may dissent from the same Supreme Court decision but provide ideologically contradictory rationales. In legislative settings, ideological opposites may join together to oppose moderate legislation in pursuit of antithetical goals. We introduce a scaling model that accommodates ends against the middle voting and provide a novel estimation approach that improves upon existing routines. We apply this method to voting data from the United States Supreme Court and Congress and show it outperforms standard methods in terms of both congruence with qualitative insights and model fit. We argue our proposed method represents a superior default approach for generating one-dimensional ideological estimates in many important settings.

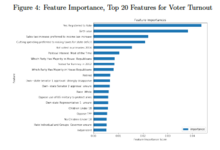

Machine learning is becoming increasingly prevalent in political science research. Improving the accuracy of outcomes, refining measurements of complex processes, addressing non-linearities in data and introducing new kinds of data may be achieved using machine learning. Despite the possible uses of machine learning, a clear understanding of how to use these tools and their pitfalls is still needed. This article provides a foundational guide to machine learning and illustrates how these methods can advance political science research. We address the pitfalls of these methods as well as the specific concerns for using machine learning with social data. Finally, we demonstrate how machine learning can help understand voter turnout through an application of methods with survey data on the 2016 election.

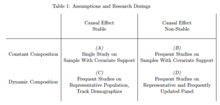

The "credibility revolution" has forced social scientists to confront the limits of our methods for creating general knowledge. The current approach aims to aggregate valid but local knowledge. At the same time, the increasing centrality of the internet to political and social processes has rendered untenable the implicit ceteris paribus assumptions necessary for aggregating knowledge produced at different times. The interaction of these two trends is not yet well understood. I argue that a high rate of change of the objects of our study makes "knowledge decay" a potentially large source of error. "Temporal validity" is a form of external validity in which the target setting is in the future, which, of course, is always the case. A crucial distinction between cross-sectional external validity and temporal validity is that the latter implies a fundamental incompleteness of social science that renders the project of non-parametric knowledge aggregation impossible. I discuss the limitations of extant strategies for knowledge aggregation through the lens of temporal validity, and propose strategies for improving practice.

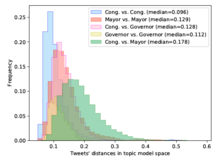

One of the defining characteristics of modern politics in the United States is the increasing nationalization of elite- and voter-level behavior. Relying on measures of electoral vote shares, previous research has found evidence indicating a significant amount of state-level nationalization. Using an alternative source of data -- the political rhetoric used by mayors, state governors, and Members of Congress on Twitter -- we examine and compare the amount of between-office nationalization throughout the federal system. We find that gubernatorial rhetoric closely matches that of Members of Congress but that there are substantial differences in the topics and content of mayoral speech. These results suggest that, on average, American mayors have largely remained focused on their local mandate. More broadly, our findings suggest a limit to which American politics has become nationalized -- in some cases, all politics remains local.

The significance and influence of US Supreme Court majority opinions derive in large part from opinions’ roles as precedents for future opinions. A growing body of literature seeks to understand what drives the use of opinions as precedents through the study of Supreme Court case citation patterns. We raise two limitations of existing work on Supreme Court citations. First, dyadic citations are typically aggregated to the case level before they are analyzed. Second, citations are treated as if they arise independently. We present a methodology for studying citations between Supreme Court opinions at the dyadic level, as a network, that overcomes these limitations. This methodology—the citation exponential random graph model—enables researchers to account for the effects of case characteristics and complex forms of network dependence in citation formation. We then analyze a network that includes all Supreme Court cases decided between 1950 and 2015. We find evidence for dependence processes, including reciprocity, transitivity, and popularity. The dependence effects are as substantively and statistically significant as the effects of the exogenous covariates we include in the model, indicating that models of Supreme Court citation should incorporate both the effects of case characteristics and the structure of past citations.

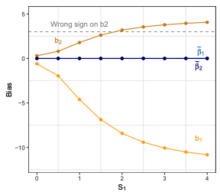

We offer methods to analyze the "differentially private" Facebook URLs Dataset which, at over 10 trillion cell values, is one of the largest social science research datasets ever constructed. The version of differential privacy used in the URLs dataset has specially calibrated random noise added, which provides mathematical guarantees for the privacy of individual research subjects while still making it possible to learn about aggregate patterns of interest to social scientists. Unfortunately, random noise creates measurement error which induces statistical bias -- including attenuation, exaggeration, switched signs, or incorrect uncertainty estimates. We adapt methods developed to correct for naturally occurring measurement error, with special attention to computational efficiency for large datasets. The result is statistically consistent and approximately unbiased linear regression estimates and descriptive statistics that can be interpreted as ordinary analyses of non-confidential data but with appropriately larger standard errors.

We have implemented these methods in open source software for R called PrivacyUnbiased. Facebook has ported PrivacyUnbiased to open source Python code called svinfer.
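The attenuation that added noise induces in a regression slope, and the way a known noise variance lets one undo it, can be illustrated with a small simulation. This is a minimal sketch of the textbook errors-in-variables moment correction, not necessarily the estimator used in the paper, and it does not call PrivacyUnbiased or svinfer. The function names `slope` and `corrected_slope`, the simulated data, and every parameter value are assumptions made for illustration only.

```python
import random


def _moments(x, y):
    """Return the sample covariance of (x, y) and the sample variance of x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)
    var_x = sum((a - mean_x) ** 2 for a in x) / (n - 1)
    return cov_xy, var_x


def slope(x, y):
    """Ordinary least squares slope of y on x (with an intercept)."""
    cov_xy, var_x = _moments(x, y)
    return cov_xy / var_x


def corrected_slope(x_noisy, y, noise_sd):
    """Slope after subtracting the known noise variance from var(x_noisy).

    Hypothetical helper: assumes the noise is additive, mean zero,
    independent of x and y, and has a known standard deviation.
    """
    cov_xy, var_x = _moments(x_noisy, y)
    return cov_xy / (var_x - noise_sd ** 2)


# Simulated data (all values are illustrative assumptions).
rng = random.Random(0)
n = 20000
true_slope = 2.0
noise_sd = 1.0

x = [rng.gauss(0, 1) for _ in range(n)]
y = [true_slope * xi + rng.gauss(0, 1) for xi in x]
# The analyst only sees x with calibrated noise added.
x_noisy = [xi + rng.gauss(0, noise_sd) for xi in x]

naive = slope(x_noisy, y)                       # attenuated toward zero
fixed = corrected_slope(x_noisy, y, noise_sd)   # close to true_slope

print(f"naive slope: {naive:.3f}")
print(f"corrected slope: {fixed:.3f}")
```

With unit-variance `x` and unit-variance noise, the naive slope is pulled toward roughly half the true value, while the corrected slope lands near `true_slope`. The correction is possible only because the noise variance is known, which is the case when the noise is added deliberately.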